AI Coding Agents Belong in the IDE

"It's like if I were trying to give you directions, but you just drove your car into the third floor of this building.” - engineer using coding agents

I think coding agents need to be in the IDE.

Our team built an AI-powered junior developer that lived in GitHub issues from 2023 to 2025, and we recently moved it into the IDE.

At a high level, coding agents work like this. A developer asks the agent to do something like “integrate a new pricing tier in my landing page”. The agent then loops over the following steps:

1. Search the codebase to understand how to solve the problem

2. Select some files to make code changes to, and then make edits to the code

3. Validate the output
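The loop above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular agent is implemented: `run_agent`, `AgentResult`, and the `search`, `edit`, and `validate` callables are hypothetical names standing in for whatever a real agent uses for each step.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentResult:
    """Outcome of one agent run (hypothetical structure for illustration)."""
    succeeded: bool
    iterations: int
    edited_files: list[str] = field(default_factory=list)


def run_agent(
    request: str,
    search: Callable[[str], list[str]],
    edit: Callable[[str, list[str]], list[str]],
    validate: Callable[[list[str]], bool],
    max_iterations: int = 3,
) -> AgentResult:
    """Loop over search -> edit -> validate until validation passes
    or the iteration budget runs out."""
    edited: list[str] = []
    for iteration in range(1, max_iterations + 1):
        candidates = search(request)        # step 1: find relevant files
        edited = edit(request, candidates)  # step 2: change some of them
        if validate(edited):                # step 3: check the output
            return AgentResult(True, iteration, edited)
    return AgentResult(False, max_iterations, edited)
```

Everything interesting hides inside the three callables. The rest of this post is mostly about why steps 1 and 3 are easier inside the IDE.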

Why IDE agents work better

So why did Cline (an agent in VS Code) take off, and why is Devin moving into the IDE?

And why did we pivot to “Cursor for JetBrains IDEs”?

Throughout 2024, we realized a few things while building an AI pull request creator.

Developers are extremely lazy. (This is a compliment.)

When we deployed Sweep into enterprise, validating the PR was really hard. Getting code deployed to production involves manual testing, and great CI is a luxury.

It’s hard to make an agent that’s smarter than the underlying model you’re using.

The AI IDE workflow is really nice for a couple of reasons. You can offload “finding the right files” to the developer’s current open files in their editor. This is better context than even the best search.
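As a rough sketch of what “offloading to open files” could look like, the function below puts the developer’s open editor files ahead of search results when assembling context. `build_context`, its parameters, and the file cap are assumptions made for illustration, not a description of how Sweep or any other tool does it.

```python
def build_context(
    open_files: list[str],
    search_hits: list[str],
    max_files: int = 5,
) -> list[str]:
    """Return file paths to show the model: open editor files first,
    then search hits, with duplicates removed and a cap on the total."""
    ordered: list[str] = []
    seen: set[str] = set()
    for path in [*open_files, *search_hits]:
        if path not in seen:
            seen.add(path)
            ordered.append(path)
    return ordered[:max_files]
```

An external agent only has the `search_hits` half of this input, which is the gap the rest of this section describes.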

We thought we could overcome this with great search. In an ideal world, if you take the developer’s request and couple it with the exact files they want to change, you can make the perfect code change.

In practice, in order to get our changes merged, we needed to write changes that fit what the developer expects.

It’s similar to getting promoted. If your boss wants x, even if y is better, sometimes doing x might be better for you as an employee.

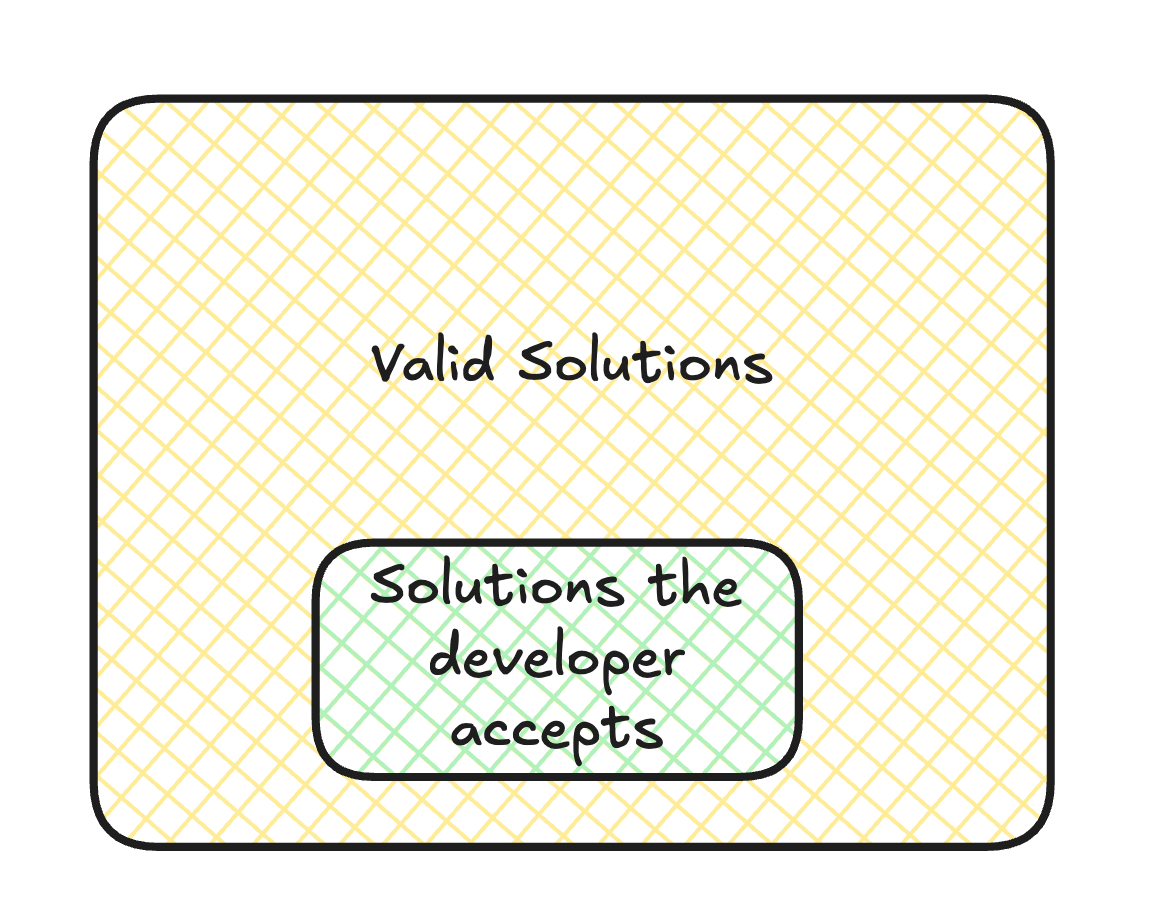

Often we would look at what Sweep did and it would be a reasonable solution, but it wouldn’t be accepted by developers.

This would be fine if acceptance rates were higher, but we ran into another problem.

Search (step 1) and planning/editing (step 2) are solvable, and you can get them to be really great. But as long as IDEs are around, an external agent’s context will always be second-class compared to an IDE agent’s.

Will the IDE still be around?

Well, won’t we replace humans + IDEs with agentic SWEs?

I think this is happening with small-scale apps like “build me a calorie counter app / snake game / todo-list”. AI app builders are really good at this, so it would be reasonable to expect that they just keep getting better.

As with AI in chess and competitive programming, they should eventually surpass human-level performance.

My main counterpoint is that in enterprise software, testing and validation are a bigger bottleneck than you think.

For example, if I were writing a new feature for Slack, the task of testing and validating the change would be extremely challenging for computer agents. It’s not as straightforward to validate progress in enterprise software as it is in chess.

Unit tests

Unit testing would be a natural solution. We could have the AI generate its own unit tests, and then run them until they succeed. We thought “why not use GitHub Actions to run the entire test suite in a loop?”
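Here is a minimal sketch of that “run until green” loop, run locally with pytest rather than in GitHub Actions. The function names, the attempt limit, and the timeout are hypothetical; `propose_fix` stands in for whatever the agent does with the failure output.

```python
import subprocess
import sys
from typing import Callable, Optional


def run_pytest(test_path: str, timeout_s: float) -> tuple[bool, str]:
    """Run pytest on test_path. Passing means exit code 0 within the timeout."""
    try:
        proc = subprocess.run(
            [sys.executable, "-m", "pytest", "-q", test_path],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout_s} seconds"
    return proc.returncode == 0, proc.stdout + proc.stderr


def fix_until_green(
    test_path: str,
    propose_fix: Callable[[str], None],
    run_tests: Callable[[str, float], tuple[bool, str]] = run_pytest,
    max_attempts: int = 3,
    timeout_s: float = 120.0,
) -> Optional[int]:
    """Run the tests, hand failures to propose_fix, and repeat.
    Returns the attempt number that passed, or None if none did."""
    for attempt in range(1, max_attempts + 1):
        passed, output = run_tests(test_path, timeout_s)
        if passed:
            return attempt
        if attempt < max_attempts:
            propose_fix(output)  # hypothetical: the agent edits code here
    return None
```

The loop costs roughly `max_attempts` times the suite’s runtime, so it is only practical when the suite is fast.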

We found that great CI/CD is a luxury. If you spin up a pytest suite for a small codebase, it works well. You can run the entire suite in 5 seconds.

However, this breaks down for bigger codebases, where it could take hours for the full suite to run. A simple test can take 2 minutes, and running the entire suite is a nightly task.

And in many cases, you might need to deploy to staging in order to truly test the code.

How do you iterate in this scenario? And is it justified to block a real developer’s testing for an AI agent’s?

The last-mile problem and failure UX

As AI coding assistants like Cursor and Windsurf got even better, the bar changed.

It moved from “can the agent write plausible code” to “can the agent be more productive than a human engineer using Cursor?”

At least in the next year, I don’t think so.

Trying a task with Devin takes tens of minutes, while using Windsurf, Cursor, or Sweep takes seconds. In theory, this shouldn’t matter because you can parallelize tasks with multiple Devins, and the ability of the AI should be greater than that of a human.

In practice, developers struggle to juggle multiple coding tasks at once, and the AI isn’t better at testing than a human is.

This is because there’s an intent recognition gap, where agents have trouble choosing between “technically-correct” solutions and “mergeable-to-production” solutions.

It’s faster for developers to poke a fast agent, see what it generates, and then test it than to write a complex spec for an AI agent that attempts to one-shot it.

As soon as the agent can’t one-shot the problem, the developer needs to go to the IDE.

If that happens often, developers end up preferring to stay in the IDE, where they can manually take over instead of re-prompting a long-running AI agent.

I think that the IDE will evolve to become the home for coding agents. It’s already preconfigured to run code, and it’s easier to bring context like Slack and Jira into the IDE than it is to bring everything into a new web or GitHub app.

As an aside: JetBrains does build some of the world’s best IDEs, so we’re starting there.